Are you tired of wrestling with the tangled mess of infrastructure that modern cloud applications demand? Configuring message queues, wrangling service discovery, and endlessly reinventing the wheel – it feels more like infrastructure engineering than actual software development.

Wouldn’t it be amazing to cut through the complexity and go back to writing simple code? Well, hold on to your keyboards, because a revolution is brewing…

In a hurry to try it out? Sign up for the developer preview for free today at console.dispatch.run!

Software always starts with a beautiful idea, built and tested on our local machines, and shipped to production as a simple application. As time passes, more features creep in, and we’re cornered into introducing complexity to handle growing user traffic or the increasing demand for performance and reliability. And what of those new contributors joining the team, whom we’re desperately trying to teach the value of keeping things simple?

Before we know it, we’re operating a mesh of microservices, queues, databases, and countless layers of abstraction and dependencies.

With each change come more moving parts, and with them the failure modes multiply. We’re forced to fit our elegant designs into a clunky patchwork of infrastructure to deal with rate limits, network timeouts, state management, and so on.

Remember the joy of bringing your idea to life and shipping that simple initial version?

Challenging the status quo often means returning to the drawing board and figuring out what fundamental pieces are missing to unlock the next step function. Doing so requires experience, insight, and a healthy dose of recklessness!

We’re a team that’s logged some serious overtime keeping the lights on for large-scale distributed systems. Our past lives building and scaling infrastructure at companies like Segment, Facebook, and Twitch made us intimately familiar with the challenges these systems bring. Let’s just say we’ve learned a few tricks along the way!

Our mission is to make distributed systems development radically simpler (think fewer late nights fueled by frustration and takeout) so software developers can focus on delivering customer value. And our commitment to open source means we’re all about sharing those hard-won lessons.

Kick-starting our journey, today we’re launching Dispatch and laying the foundation of a new programming model with a first building block: a serverless orchestrator to power your distributed applications!

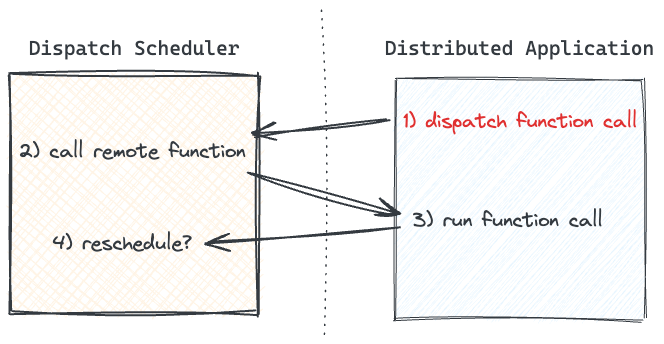

We designed Dispatch for reliability, scalability, and simplicity. On one side is a serverless orchestrator we host, and on the other, the application you run. An SDK integrated into your code does the heavy lifting of bridging the two systems.

This architecture has many benefits: it minimizes the operational complexity on the application side because the orchestrator handles all the queuing, scheduling, and state management. It also adapts to a wide range of deployment models. Whether you’re running on AWS, GCP, Azure, or anywhere else, as long as your application can connect to Dispatch, we’ve got you covered!

Along with the service, we’re launching the first SDK enabling Python developers to build on Dispatch.

Let’s consider an application that sends emails to its users with Resend. If it exceeds the Resend rate limit, the delivery must be retried later so the email is not lost. The program would likely contain the following function:

    import resend

    @dispatch.function
    def send_email(to: str, subject: str, html: str) -> None:
        resend.Email.send({
            'to': to,
            'subject': subject,
            'html': html,
            # ...
        })

Whenever the Dispatch function encounters a temporary error like a rate limit, the operation is rescheduled with automatic adjustment of the back-off delay.

Without Dispatch, the asynchronous email delivery could get lost if the program aborted too early, or if it encountered an error. By applying a simple decorator to the function, the operation is now guaranteed to run to completion.
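To make the contrast concrete, here is a minimal sketch of the retry loop an application might hand-roll without an orchestrator. Everything in it is an assumption made for illustration: `TemporaryError`, `retry_with_backoff`, and the delay values are hypothetical names and defaults, not part of Dispatch or Resend.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TemporaryError(Exception):
    """Hypothetical marker for retryable failures such as a rate limit."""


def retry_with_backoff(
    operation: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `operation`, retrying temporary errors with exponential back-off."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return operation()
        except TemporaryError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error to the caller
            # Double the ceiling on each attempt, cap it, and pick a random
            # delay below it so many clients do not retry in lockstep.
            ceiling = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, ceiling))
    raise AssertionError("unreachable")
```

Note what this loop cannot do: its state lives in the memory of a single process, so a crash or a deploy in the middle of a retry loses the email. That gap is the one the decorator is meant to close.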

A major barrier developers encounter trying to use existing solutions is having to go all-in and build their application from the ground up with a framework. We knew that to fulfill its purpose, Dispatch had to do better, so we designed it to allow incremental adoption into existing programs.

Wherever the program has to send an email, instead of calling send_email directly, it dispatches the call with:

    send_email.dispatch(to, subject, html)

There is no need to configure the retry policy: the serverless orchestrator automatically detects concurrency and rate limits based on the volume of errors it observes. It self-throttles to maximize utilization while staying within the limits of the system.

Reliability doesn’t get much simpler than that!
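For readers curious about what self-throttling from observed errors can look like, one well-known approach is additive-increase/multiplicative-decrease (AIMD), the same idea TCP uses for congestion control. The sketch below is an illustration of that general technique only; we are not claiming it is the algorithm Dispatch runs, and `AdaptiveLimit` and its parameters are hypothetical names.

```python
class AdaptiveLimit:
    """Additive-increase / multiplicative-decrease (AIMD) concurrency limit."""

    def __init__(
        self,
        initial: float = 1.0,
        minimum: float = 1.0,
        maximum: float = 100.0,
        backoff: float = 0.5,
    ) -> None:
        self.limit = initial
        self.minimum = minimum
        self.maximum = maximum
        self.backoff = backoff

    def on_success(self) -> None:
        # Probe for spare capacity slowly: about +1 per full window of
        # successful calls.
        self.limit = min(self.maximum, self.limit + 1.0 / self.limit)

    def on_error(self) -> None:
        # An error such as a rate-limit response suggests we overshot, so
        # cut the limit sharply.
        self.limit = max(self.minimum, self.limit * self.backoff)

    def allowed(self) -> int:
        """Number of calls that may be in flight right now."""
        return int(self.limit)
```

A scheduler built on this would call `on_success()` or `on_error()` after each invocation and keep at most `allowed()` calls in flight, which lets the limit hover just under whatever the downstream system tolerates.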

You’re excited about Dispatch but your primary programming language isn’t Python? Join our Discord channel and let us know which language you would like us to support!

At the heart of the Dispatch programming model are simple Python functions, but with a twist! They are automatically distributed across a production fleet, and can be suspended and resumed on any instance of the application.

This capability enables developers to express arbitrarily complex workflows directly in code as if they were programming a local application. All the complexity of scheduling and tracking the progress through steps is delegated to the orchestrator.

You can think of it as your distributed application behaving almost like a single computer, with processing and state transparently moving around. This is Durable Execution at your fingertips!
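To give a feel for what "resumed on any instance" implies, here is a deliberately simplified sketch that checkpoints the result of each step in a journal and replays it on a re-run. Journal replay is a stand-in technique chosen for illustration, not a description of how Dispatch works internally, and `Journal` and `run_workflow` are hypothetical names.

```python
import json
from typing import Any, Callable, Dict, Optional


class Journal:
    """Records the result of each completed step so a re-run can skip it."""

    def __init__(self, entries: Optional[Dict[str, Any]] = None) -> None:
        self.entries: Dict[str, Any] = dict(entries or {})

    def step(self, name: str, fn: Callable[[], Any]) -> Any:
        if name in self.entries:
            return self.entries[name]  # already done: replay the result
        result = fn()
        self.entries[name] = result  # checkpoint before moving on
        return result

    def dump(self) -> str:
        """Serialize the journal so another process can pick it up."""
        return json.dumps(self.entries)

    @classmethod
    def load(cls, data: str) -> "Journal":
        return cls(json.loads(data))


def run_workflow(
    journal: Journal,
    charge: Callable[[], str],
    notify: Callable[[str], str],
) -> str:
    # Each step runs at most once per journal, even across restarts.
    receipt = journal.step("charge", charge)
    return journal.step("notify", lambda: notify(receipt))
```

If the process dies after the first step, a different instance can call `Journal.load` on the saved data and run the same workflow: the completed step is replayed from the journal rather than executed twice.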

Let’s return to our example application, this time with a new use case: sending email notifications to multiple users, then waiting for all the deliveries to complete so we can mark them as done in our database. This is a typical fan-out and fan-in workflow, where the first steps run concurrently and are awaited before moving on. Here is how it would be expressed:

    @dispatch.function
    async def notify_users(opid: str, emails: list[str]) -> None:
        # Calling the dispatch function creates an asynchronous invocation but does
        # not block execution here.
        pending = [send_email(email) for email in emails]

        # Wait for all asynchronous operations to complete so we can gather their
        # results.
        results = await gather(*pending)

        # The last step of the workflow is reporting on the status of emails
        # delivered to our users.
        await db_update_notifications(opid, emails, results)

Note that durability and reliability features compound here. Each concurrent function call is independent and will be retried if it encounters a failure, and the caller will only be resumed when all the functions it is awaiting have run to completion.
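If the fan-out and fan-in shape is new to you, the same pattern can be tried locally with nothing but the standard library. The sketch below uses plain `asyncio` rather than Dispatch, so it has none of the durability or retry guarantees described above; the `send_email` stub and the example addresses are made up for the demonstration.

```python
import asyncio
from typing import List


async def send_email(address: str) -> str:
    # Stand-in for a real delivery: yields to the event loop, does no I/O.
    await asyncio.sleep(0)
    return f"delivered:{address}"


async def notify_users(emails: List[str]) -> List[str]:
    # Fan-out: create one coroutine per recipient without awaiting it yet.
    pending = [send_email(address) for address in emails]
    # Fan-in: resume only once every delivery has finished. Results come
    # back in the same order as the inputs.
    return await asyncio.gather(*pending)


results = asyncio.run(notify_users(["a@example.com", "b@example.com"]))
```

The structure is the same as the Dispatch version; what changes is where the pending work lives. Here it sits in one event loop and vanishes if the process exits, whereas the orchestrator tracks each invocation on its own.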

Dispatch provides a simple yet powerful programming model to develop distributed applications. By removing the need to manage queues, databases, and state machines, it allows developers to focus on creating value instead of dealing with infrastructure.

You can sign up for the developer preview for free today at console.dispatch.run!

We’ve barely scratched the surface here, so follow us on Twitter and join our Discord channel to stay tuned for our upcoming article about Distributed Coroutines, the key primitive at the heart of the Dispatch programming model!

Join the community, sign up and start building.